
So, I wanted to automate tasks in my homelab with Terraform and Ansible without ever having to git clone a repo or keep the binaries locally. Another thing I hated was dealing with Terraform state files. I use various machines, and not having the state file at hand is a pain when you want to update or destroy a Terraform-deployed host. A couple of projects like Gaia handled state files and Terraform deployments, but Gaia has died like many similar projects. You can use a remote state backend, but most of those require enterprise/cloud solutions like S3, and running MinIO just for state files feels clunky. Very few options are even available for homelab/self-hosted use.

Originally, I deployed Semaphore for this task. The project recently added Terraform support and can accept inputs for pipelines, but I ran into issues. Although workspaces were in the UI, the inventory/workspace selection at execution time was ignored. The only way to change the workspace was to create it on a separate screen and then modify the task to select it, every time. That mattered because with Terraform workspaces I could create a workspace for each deployed machine, and its state file would live in that workspace's folder. I also abhor paywalls, and a couple of features (and future fixes) were hidden behind Semaphore's license. Eventually I realized that I already had something better than Semaphore: Gitea!

If you don’t have Gitea installed, it may be the best single-binary web Git server for the homelab. Beyond being a web interface for Git repositories, it has wiki support, issues, projects, organizations, a package registry (more on this later) and, somewhat recently, Actions, which are largely compatible with GitHub Actions. Some of it can’t be configured in the GUI, which may be off-putting to some, but it is well worth learning.

Gitea Actions

Actions are basically a more condensed and less complicated version of Jenkins. You set up workflows (e.g. Deploy Virtual Machine) that can contain various tasks (e.g. Git Clone, Terraform Deploy), each reporting whether it succeeded or failed. There are various ways to kick off workflows, logic can be added to tasks to branch execution, and there are SO many public extensions/integrations that simplify your workflows. You can also set up inputs that are requested when the workflow is executed!

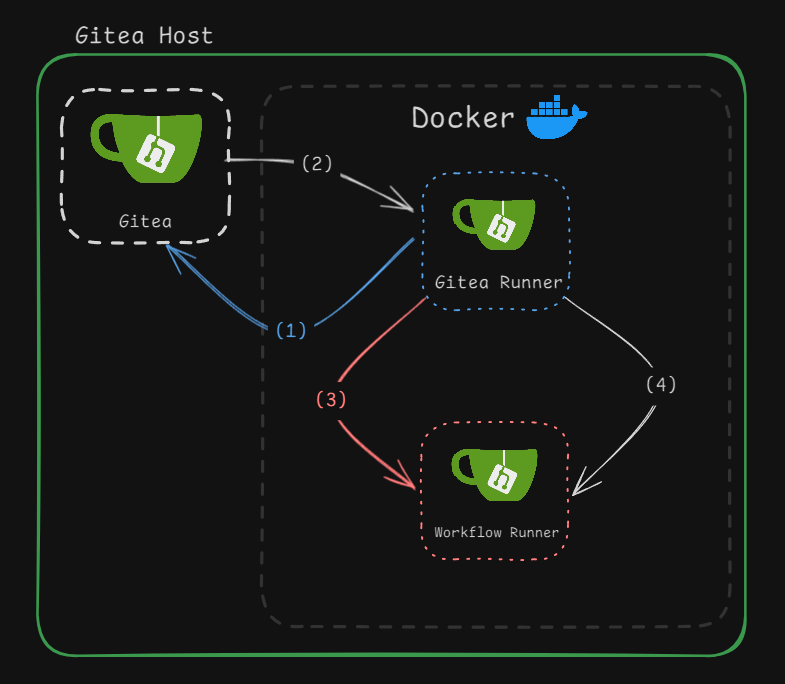

So how do they work? Out of the box, Gitea is a pretty self-contained single Go binary. Installing it is as simple as downloading it with wget and copying the binary to /usr/bin. If you want to run it as a service, create a systemd service file, enable it, and start it. Gitea Actions requires a few more moving parts: a Gitea Runner, plus Docker. In my setup, the Gitea Runner also runs in Docker. Once a Gitea Runner is registered with the Gitea instance, it can accept jobs from Gitea and launch a container to run each workflow in.
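A service file for that step might look like the bash sketch below. It writes a systemd unit for a binary at /usr/bin/gitea. The `git` user, the /var/lib/gitea working directory, the /etc/gitea/app.ini config path, and the `write_gitea_unit` helper name are all assumptions for illustration, not details of my setup, so adjust them to your install.

```shell
#!/usr/bin/env bash
# Sketch: write a systemd unit for a Gitea binary copied to /usr/bin/gitea.
# ASSUMPTIONS: a "git" system user, data in /var/lib/gitea, config at
# /etc/gitea/app.ini. write_gitea_unit is a hypothetical helper name.
write_gitea_unit() {
  local unit_dir="${1:-/etc/systemd/system}"   # where systemd looks for local units
  mkdir -p "$unit_dir"
  cat > "$unit_dir/gitea.service" <<'EOF'
[Unit]
Description=Gitea
After=network.target

[Service]
Type=simple
User=git
Group=git
WorkingDirectory=/var/lib/gitea/
ExecStart=/usr/bin/gitea web --config /etc/gitea/app.ini
Restart=always
Environment=USER=git HOME=/home/git GITEA_WORK_DIR=/var/lib/gitea

[Install]
WantedBy=multi-user.target
EOF
  # Print the path of the unit that was written
  echo "$unit_dir/gitea.service"
}
```

After writing the unit into /etc/systemd/system, running `systemctl daemon-reload` and then `systemctl enable --now gitea` enables and starts it.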

(1) The Gitea Runner registers with the Gitea instance.

(2) When a workflow needs to kick off, Gitea has the runner spawn a new workflow container.

(3) The Gitea Runner launches the workflow container based on the settings in its config file.

(4) Tasks run inside the workflow container, and their results are returned to Gitea.

As long as your workflow's “runs-on” value matches a label that the runner has a configuration for, the entire process is automated.
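Here is a rough bash model of the “runs-on” matching. act_runner labels take the form `<name>:docker://<image>`. The sketch looks up the label whose name equals the requested value and returns its image. The `RUNNER_LABELS` array, the `image_for_runs_on` function, and the image it points at are hypothetical. This is a simplified illustration rather than act_runner's actual code, and it ignores non-Docker schemes such as `host`.

```shell
#!/usr/bin/env bash
# Hypothetical runner labels in act_runner's "<name>:docker://<image>" form.
# ASSUMPTION: the image is a custom one pushed to a local Gitea registry.
RUNNER_LABELS=(
  "ubuntu-latest:docker://git.home.lab/homelab/ubuntu:latest"
)

# Print the container image for a runs-on value; return 1 if no label matches.
image_for_runs_on() {
  local wanted="$1" label name rest
  for label in "${RUNNER_LABELS[@]}"; do
    name="${label%%:*}"   # text before the first ':' is the label name
    rest="${label#*:}"    # remainder, e.g. docker://registry/owner/image:tag
    if [[ "$name" == "$wanted" && "$rest" == docker://* ]]; then
      echo "${rest#docker://}"
      return 0
    fi
  done
  return 1
}
```

A workflow with `runs-on: ubuntu-latest` would therefore get a container from `git.home.lab/homelab/ubuntu:latest`. A value with no matching label is never picked up by this runner.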

How I Set Up Terraform with Gitea Actions

In my case, I had a preexisting Terraform configuration that deploys hosts to Proxmox. It uses the bpg/proxmox provider to clone a VM base image, then uses cloud-init for basic setup like resizing the disk, setting memory/CPU, and setting the hostname, tags, and notes. I would enter my variables into a tfvars file, set my secrets in either a .env file or exported environment variables, and then run terraform plan and terraform apply. My Terraform state lived in a local “terraform.tfstate” file, and I had to move it to a NAS myself to keep track of it unless I wanted to use one of the cloud providers.

```hcl
# Homelab Terraform Variables
# The name of the host
hostname = ""
# linux, windows_server, windows_desktop
host_type = "linux"
# in GB
disk_size = "500"
# in MB
memsize = "2048"
# how many cores?
numcpu = "2"
# Appears in the summary
vm_notes = ""
# default tag as TF for terraform
vm_tags = ["", "TF"]
# Which network segment? dmz, lab etc
network = "dmz"
```
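Terraform also reads input variables from environment variables named `TF_VAR_<name>`, which is how exported secrets reach a plan. The bash sketch below turns a .env-style file of `key=value` lines into those exports. The `load_tf_vars_from_env_file` helper is hypothetical, as are the file and variable names in the usage line.

```shell
#!/usr/bin/env bash
# Sketch: export each "key=value" line of a .env-style file as TF_VAR_key,
# the environment-variable form Terraform reads for input variables.
# ASSUMPTION: simple unquoted lines; load_tf_vars_from_env_file is hypothetical.
load_tf_vars_from_env_file() {
  local file="$1" line key value
  while IFS= read -r line || [[ -n "$line" ]]; do
    # Skip blank lines, comments, and anything that is not key=value
    [[ -z "$line" || "$line" == \#* || "$line" != *=* ]] && continue
    key="${line%%=*}"     # text before the first '='
    value="${line#*=}"    # everything after it (may itself contain '=')
    export "TF_VAR_${key}=${value}"
  done < "$file"
}
```

Usage, assuming the files are named `.env` and `homelab.tfvars`: `load_tf_vars_from_env_file .env && terraform plan -var-file=homelab.tfvars`.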

So how does Gitea / Gitea Actions make this better?

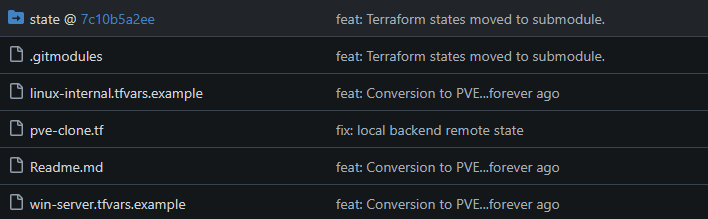

Although not Gitea-specific, my main gripe about handling Terraform state files was solved with Git submodules. A submodule lets a folder be a separate Git repo. By making my state folder a submodule, I could commit that folder separately from the Terraform code while still needing only a single clone (with submodules) to use the repo on other machines. This also served as a log of actions, assuming I committed them. I added a “local” backend to the Terraform configuration, set its “workspace_dir” to “./state”, and selected the Terraform workspace at runtime. With that in place, all my state files end up in “./state/<workspace_name>/terraform.tfstate”. I did not have to use a submodule here, but doing so made the repo commits feel cleaner when used with Gitea Actions.
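The backend setting and the resulting layout can be sketched in bash. The heredoc is a `local` backend block with `workspace_dir` pointed at ./state. Terraform then keeps each non-default workspace's state at `<workspace_dir>/<name>/terraform.tfstate`, while the `default` workspace still uses the top-level `terraform.tfstate`. The `backend.tf` file name and both helper functions are illustrative assumptions. The submodule itself would be added once with `git submodule add <state-repo-url> state`, and another machine gets code and state together with `git clone --recurse-submodules`.

```shell
#!/usr/bin/env bash
# Sketch: write a local-backend config that stores workspace state under ./state.
# ASSUMPTION: backend.tf is a hypothetical file name; any *.tf file works.
write_local_backend() {
  cat > "${1:-.}/backend.tf" <<'EOF'
terraform {
  backend "local" {
    workspace_dir = "./state"
  }
}
EOF
}

# Where the local backend keeps a workspace's state: non-default workspaces go
# to <workspace_dir>/<name>/terraform.tfstate, "default" uses terraform.tfstate.
state_file_for() {
  local workspace="$1" workspace_dir="${2:-./state}"
  if [[ "$workspace" == "default" ]]; then
    echo "terraform.tfstate"
  else
    echo "${workspace_dir}/${workspace}/terraform.tfstate"
  fi
}
```

With one workspace per hostname, `state_file_for web01` prints `./state/web01/terraform.tfstate`, which is the file that ends up committed to the state submodule.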

Once that was set up, I could take a deep dive into Gitea Actions to solve the main issue: having to git clone, EVER. Gitea Actions is largely compatible with GitHub Actions, so it helps to just look for GitHub Actions documentation. There are so many extensions (actions) that are downloaded automatically when referenced with the “uses” key. In some cases, though, an extension felt useless compared with just running the appropriate command in bash.

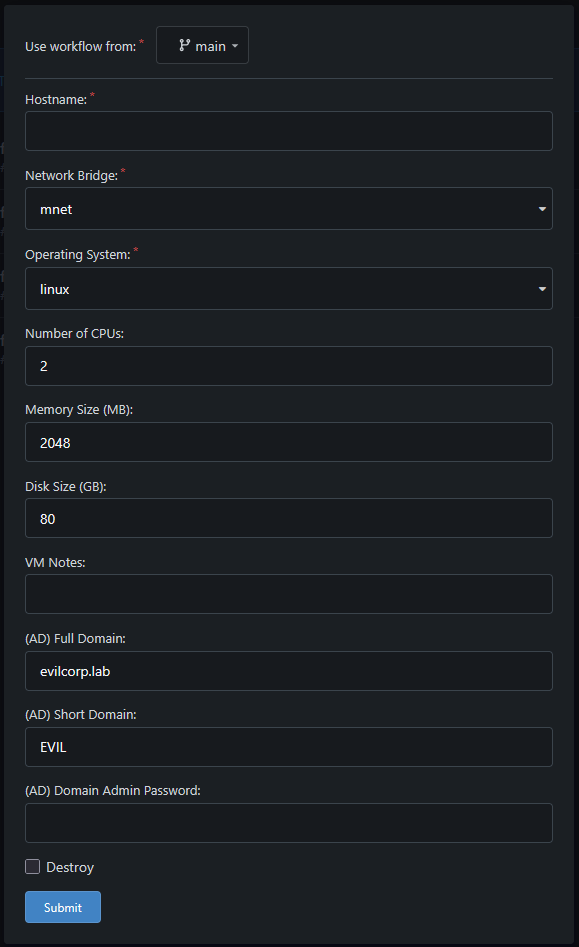

Rather than filling out a Terraform variables file, all I have to do is enter a hostname when I click the “Run Workflow” button in the Gitea GUI.

If I need to tear down the host, I also check the “Destroy” flag at the bottom and ensure the Hostname is set correctly.

This setup also allows me to use Gitea Secrets to store sensitive API keys and passwords rather than having them in a .env file or exporting the environment variables.

Below is the top portion of my clone workflow, which produces the pop-up box inputs. The “on” key defines what triggers the workflow. It can be several things, such as cron schedules or push and pull request events, and a workflow can be triggered by multiple events. The “workflow_dispatch” trigger is what allows the workflow to be run with a button press.

PVE Clone Workflow YAML

```yaml
name: PVE Clone Deploy

on:
  workflow_dispatch:
    inputs:
      hostname:
        description: 'Hostname'
        required: true
        type: string
      network:
        description: 'Network Bridge'
        required: true
        default: 'mnet'
        type: choice
        options:
          - mnet
          - dmz
          - lab
      host_type:
        description: 'Operating System'
        required: true
        default: 'linux'
        type: choice
        options:
          - linux
          - win_server
          - win_desktop
      numcpu:
        description: 'Number of CPUs'
        required: false
        default: '2'
      memsize:
        description: 'Memory Size (MB)'
        required: false
        default: '2048'
      disk_size:
        description: 'Disk Size (GB)'
        required: false
        default: '80'
      vm_notes:
        description: 'VM Notes'
        required: false
        default: ''
      full_domain:
        description: '(AD) Full Domain'
        required: false
        type: string
        default: 'evilcorp.lab'
      short_domain:
        description: '(AD) Short Domain'
        required: false
        type: string
        default: 'EVIL'
      new_admin_pass:
        description: '(AD) Domain Admin Password'
        required: false
        default: ''
      should_destroy:
        description: 'Destroy'
        required: false
        type: boolean
        default: 'false'
```

Outside of that, the workflow is basically what you would normally type on the command line:

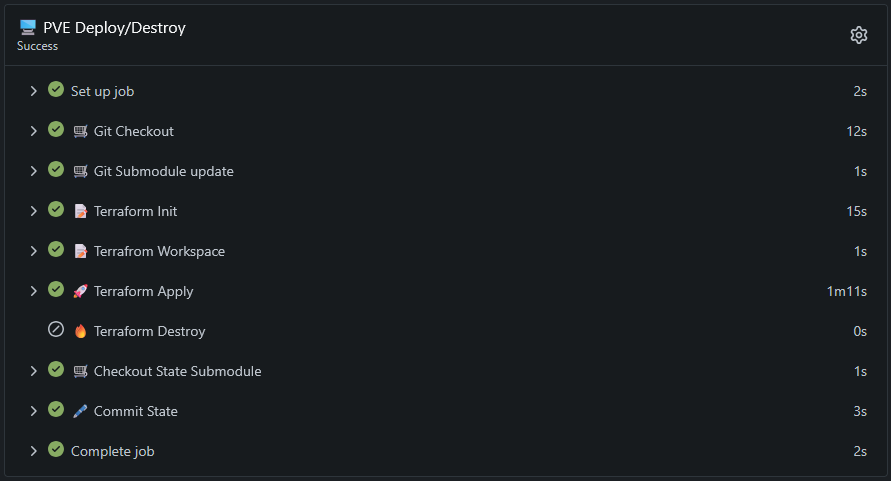

1. Check out your Terraform repository.

2. Update the state submodule.

3. Initialize Terraform.

4. Select (or create) the Terraform workspace for the host.

5. If the should_destroy flag is NOT set, Terraform Apply! Otherwise, skip this step.

6. If the should_destroy flag IS set, Terraform Destroy! Otherwise, skip this step.

7. Check out the main branch of the state submodule.

8. Commit to the state repo with a deploy/destroy commit message containing the hostname.
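For comparison, the same steps run by hand look roughly like the bash sketch below. It assumes terraform and git are installed, the repo is already cloned (step 1), and the state submodule lives in ./state. It passes only the hostname variable; the other `-var` flags from the workflow are omitted. The `run` and `deploy_or_destroy` helpers and the `DRY_RUN` switch are hypothetical, added so the sequence can be previewed without touching anything.

```shell
#!/usr/bin/env bash
# Sketch of the workflow steps as a local script.
# ASSUMPTIONS: terraform and git on PATH, state submodule at ./state,
# only the hostname variable passed. DRY_RUN=1 prints commands instead.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

deploy_or_destroy() {
  local host="$1" destroy="${2:-false}" action=apply verb=deploy
  if [[ "$destroy" == true ]]; then
    action=destroy
    verb=destroy
  fi
  run git submodule update --init --remote --merge             # step 2: sync state repo
  run terraform init -input=false                              # step 3
  run terraform workspace select -or-create=true "$host"       # step 4: one workspace per host
  run terraform "$action" -auto-approve -var="hostname=$host"  # steps 5-6
  run git -C state checkout main                               # step 7
  run git -C state add .                                       # step 8: record the new state
  run git -C state commit -m "$verb: $host"
  run git -C state push
}
```

For example, `DRY_RUN=1 deploy_or_destroy web01 true` prints the destroy sequence for a host named web01. Note that `git commit` exits non-zero if the state did not change.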

Below is the remainder of the YAML for the deployment. This is probably not copy-pasta for most people; it will fail if the job image lacks the appropriate packages. In this final version, I don't apt-install anything, nor do I have to mess with certificates for my self-signed TLS, because I am also rolling my own image.

PVE Clone Workflow YAML Continued

```yaml
jobs:
  deploy:
    runs-on: ubuntu-latest
    name: "🖥️ PVE Deploy/Destroy"
    steps:
      - name: 🛒 Git Checkout
        uses: actions/checkout@v3
        with:
          token: ${{ secrets.GIT_RUNNER_TOKEN }}
          submodules: true
          fetch-depth: 0

      - name: 🛒 Git Submodule update
        run: |
          git submodule init
          git submodule update --remote --merge

      - name: 📝 Terraform Init
        run: |
          terraform init

      - name: 📝 Terraform Workspace
        run: |
          terraform workspace select -or-create=true ${{ inputs.hostname }}

      - name: 🚀 Terraform Apply
        if: ${{ inputs.should_destroy == false }}
        run: |
          echo "🚀 Terraform Apply"
          terraform apply -auto-approve \
            -var="hostname=${{ inputs.hostname }}" \
            -var="network=${{ inputs.network }}" \
            -var="host_type=${{ inputs.host_type }}" \
            -var="numcpu=${{ inputs.numcpu }}" \
            -var="memsize=${{ inputs.memsize }}" \
            -var="disk_size=${{ inputs.disk_size }}" \
            -var="vm_notes=${{ inputs.vm_notes }}" \
            -var="full_domain=${{ inputs.full_domain }}" \
            -var="short_domain=${{ inputs.short_domain }}" \
            -var="new_admin_pass=${{ inputs.new_admin_pass }}" \
            -var='vm_tags=["TF"]' \
            -var="teleport_server=jump.home.lab" \
            -var="pve_host=https://pve.home.lab:8006/api2/json" \
            -var="default_pass=${{ secrets.DEFAULT_PASS }}" \
            -var="pve_token=${{ secrets.PVE_TOKEN }}" \
            -var="teleport_auth_token=${{ secrets.TELEPORT_AUTH_TOKEN }}"

      - name: 🔥 Terraform Destroy
        if: ${{ inputs.should_destroy == true }}
        run: |
          echo "🔥 Terraform Destroy"
          terraform destroy -auto-approve \
            -var="hostname=${{ inputs.hostname }}" \
            -var="network=${{ inputs.network }}" \
            -var="host_type=${{ inputs.host_type }}" \
            -var="vm_notes=${{ inputs.vm_notes }}" \
            -var="teleport_server=jump.home.lab" \
            -var="pve_host=https://pve.home.lab:8006/api2/json" \
            -var="default_pass=${{ secrets.DEFAULT_PASS }}" \
            -var="pve_token=${{ secrets.PVE_TOKEN }}" \
            -var="teleport_auth_token=${{ secrets.TELEPORT_AUTH_TOKEN }}"

      - name: 🛒 Checkout State Submodule
        run: |
          cd ./state
          echo "🔃 $(pwd)"
          git checkout main
          cd -

      - name: 🖋️ Commit State
        uses: EndBug/add-and-commit@v9
        with:
          cwd: 'state'
          add: '.'
          author_name: GiteaRunner
          author_email: runner@git.home.lab
          message: >
            ${{ inputs.should_destroy == true && format('destroy: {0}', inputs.hostname) || format('deploy: {0}', inputs.hostname) }}
          push: true
```

Taking it a Step Further

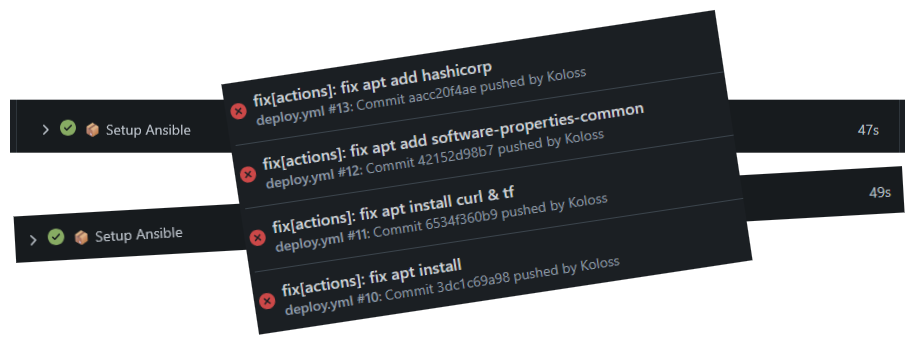

After over 75 commits, I finally had a working PVE deployment workflow, complete with emoji bedazzling. I no longer have to git clone to deploy machines, mess with Terraform state files, or log in to Proxmox to clone machines. I also set up Ansible, which is basically the same process, but I ran into one issue: apt-installing packages in each job could sometimes take FOREVER. That step averaged around 45 seconds, and removing it cut most playbook run times in half.

To remove that step, I ended up writing my own Dockerfile. Rather than pushing the image to Docker Hub, I use Gitea as a private registry, which needed no modifications to the server or Docker deployment beyond specifying the hostname of my local Gitea instance.

```dockerfile
# Homelab Gitea Runner Dockerfile
FROM ubuntu:latest

# Trust the homelab's internal CA so jobs can reach self-signed TLS services
COPY certs/homelab_internal.crt /usr/local/share/ca-certificates/homelab.crt
RUN chmod 644 /usr/local/share/ca-certificates/homelab.crt

ENV DEBIAN_FRONTEND=noninteractive TZ=America/Chicago

# Base tooling needed to add HashiCorp's apt repository
RUN apt-get update -y
RUN apt-get install -y curl ca-certificates wget gpg
RUN apt-get upgrade -y

# HashiCorp apt repository (provides the terraform package)
RUN wget -O - https://apt.releases.hashicorp.com/gpg | gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg
RUN echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com bookworm main" | tee /etc/apt/sources.list.d/hashicorp.list

# Workflow tooling; nodejs is what runs JavaScript actions such as actions/checkout
RUN apt-get update -y
RUN apt-get install -y nodejs golang-go ansible terraform docker-compose

# Add the homelab CA copied above to the system trust store
RUN update-ca-certificates
```
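Gitea's container registry addresses images as `<gitea-host>/<owner>/<image>:<tag>`, which is why the image here is tagged `git.home.lab/homelab/ubuntu:latest`. The hypothetical `gitea_image_ref` helper below just assembles that reference. It lowercases the owner/image path because Docker rejects uppercase repository names. It is a convenience sketch, not part of Gitea or Docker.

```shell
#!/usr/bin/env bash
# Sketch: build a Gitea container registry reference <host>/<owner>/<image>:<tag>.
# gitea_image_ref is a hypothetical helper; Docker requires a lowercase
# repository path, so owner/image are lowercased (the tag is left as-is).
gitea_image_ref() {
  local host="$1" owner="$2" image="$3" tag="${4:-latest}"
  local repo
  repo="$(printf '%s/%s' "$owner" "$image" | tr '[:upper:]' '[:lower:]')"
  printf '%s/%s:%s\n' "$host" "$repo" "$tag"
}
```

Usage: `ref="$(gitea_image_ref git.home.lab homelab ubuntu)"`, then `docker build -t "$ref" .` and `docker push "$ref"` after a `docker login git.home.lab`.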

Since I was already on a Gitea automation kick, I added another Actions workflow that kicks off on a “push” to the repository holding the Dockerfile. The workflow sets up Docker, builds the image, and pushes it to the Gitea instance, making each new version available to the runner itself. After the initial push and a one-time update to the runner's configuration files, the runner was basically building a better version of itself each time.

```yaml
name: Gitea Docker Build & Push

on:
  push:

jobs:
  build:
    runs-on: ubuntu-latest
    name: "Gitea Docker Build & Push"
    steps:
      - name: 🛒 Git Checkout
        uses: actions/checkout@v3
        with:
          token: ${{ secrets.GIT_RUNNER_TOKEN }}

      - name: 📦 Setup Docker
        run: |
          apt-get update -y
          apt-get install -y docker-compose

      - name: ⚓ Docker Build
        run: |
          docker build ./ -t git.home.lab/homelab/ubuntu:latest

      - name: 🚀 Docker Push
        env:
          CR_PAT: ${{ secrets.GIT_RUNNER_TOKEN }}
        run: |
          echo "$CR_PAT" | docker login git.home.lab -u user --password-stdin
          docker push git.home.lab/homelab/ubuntu:latest
```